How to install Auto-GPT: Best practices and how to use it in 2025

OpenAI’s Generative Pre-trained Transformer (GPT) language models help simplify various tasks, including application development, as tools like GitHub Copilot show. Auto-GPT is one of many tools built on this technology.

Auto-GPT is a general-purpose, autonomous AI agent. Unlike ChatGPT, it automatically breaks down tasks and generates follow-up prompts until it achieves the goal, with minimal human intervention.

To use the tool, you must fetch it with Git and connect it to the OpenAI API. This tutorial explains how to install Auto-GPT on a virtual private server (VPS) and shares best practices for using it.

What Is Auto-GPT?

Auto-GPT is a general-purpose, autonomous AI agent based on OpenAI’s GPT large language model. Unlike other AI models, it can automatically generate follow-up prompts to complete tasks with minimal human interaction. Auto-GPT helps simplify various tasks, including application development and data analysis. To use the tool, users must clone the Git repository and integrate the OpenAI API.

Prerequisites for installing Auto-GPT

You need a VPS hosting plan to host and run Auto-GPT in a production environment. The tool doesn’t require much computing power, but your server must support the following software:

- Python and pip. The programming language lets your system run Auto-GPT code, while the package manager allows you to install and manage the dependencies.

- Git. This version control system fetches the Auto-GPT files and code from the repository during installation.

- Docker. This tool packages Auto-GPT with its dependencies and libraries as a container, which helps simplify the testing and deployment process.

In addition to supporting all the required software, Hostinger’s VPS hosting service offers full root access to avoid permission-related issues during installation and configuration. We also provide Kodee – an AI assistant tool that helps generate Linux commands, which you can run on your server remotely using our Browser terminal. Plus, we offer templates to install various Linux distributions and applications in one click.

After purchasing and setting up a VPS plan from Hostinger, connect using an SSH client like PuTTY, Terminal, or our Browser terminal. Find the login credentials in the SSH access tab of your VPS overview menu in hPanel.

Once connected, update your system’s package manager. The command differs depending on your operating system. For Debian, Ubuntu, and other derivatives, run:

sudo apt update && sudo apt upgrade

For CentOS, AlmaLinux, and other Red Hat Enterprise Linux (RHEL) derivatives, use the following command:

sudo yum update && sudo yum upgrade

Important! To deploy Auto-GPT on Windows, install the required software using the executable installers or Command Prompt. On a local computer, we recommend setting up a code editor like VSCode to modify the files easily.

How to install Auto-GPT

After preparing your server, follow these steps to install Auto-GPT on your VPS. We use Ubuntu 22.04, so modify the commands as necessary for your operating system.

1. Install Python and Git

Run the following command to install the Python programming language, pip package manager, the Python virtual environment, and Poetry dependency manager:

sudo apt install python3 python3-pip python3-venv python3-poetry

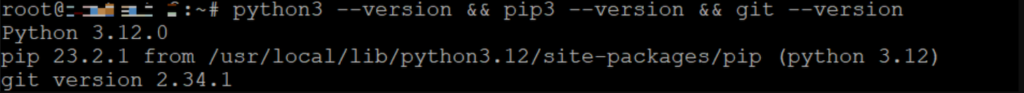

Check whether Python and pip are configured correctly on your server using these commands:

python3 --version

pip3 --version

Ensure you have Python version 3.8 or later to meet the minimum requirement. If your package manager provides an older version, download a newer release manually from the Python release page.
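If you provision several servers, you may prefer to check the version from a script instead of reading the output by eye. The sketch below is a hypothetical helper (the file name check_python.py and the function meets_minimum are our own, not part of Auto-GPT) that compares the running interpreter against the 3.8 minimum stated above.

```python
import sys

# Minimum version stated in this guide; adjust it if the Auto-GPT
# release you clone documents a different requirement.
MINIMUM_PYTHON = (3, 8)


def meets_minimum(version: tuple, minimum: tuple = MINIMUM_PYTHON) -> bool:
    """Return True if a (major, minor, ...) version tuple is at least `minimum`."""
    # Tuples compare element by element, so (3, 10) >= (3, 8) is True.
    return tuple(version[: len(minimum)]) >= minimum


if __name__ == "__main__":
    current = sys.version_info[:3]
    status = "OK" if meets_minimum(current) else "too old"
    print(f"Python {'.'.join(map(str, current))}: {status}")
```

Run it with python3 check_python.py. Comparing tuples avoids the string-comparison trap where "3.10" would sort before "3.8".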

Install the Git version control system to fetch the Auto-GPT repository. Here’s the command:

sudo apt install git

Verify the Git installation with this command:

git --version

Now, create and activate a Python virtual environment so that the modules required for Auto-GPT are installed separately from your system’s Python packages. To do so, run the following commands one at a time:

mkdir myproject

cd myproject

python3 -m venv myenv

source myenv/bin/activate
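Before installing packages, you can confirm the environment is really active. A minimal sketch, assuming the standard venv behavior described in the Python documentation: inside a virtual environment, sys.prefix points at the environment folder while sys.base_prefix still points at the base installation. The function name in_virtualenv is hypothetical.

```python
import sys


def in_virtualenv(prefix: str = sys.prefix, base_prefix: str = sys.base_prefix) -> bool:
    """Return True when running inside a venv.

    The venv module sets sys.prefix to the environment directory while
    sys.base_prefix keeps pointing at the base Python installation.
    """
    return prefix != base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"Virtual environment active: {sys.prefix}")
    else:
        print("No virtual environment active; run: source myenv/bin/activate")
```

If the script reports no active environment, pip will install packages system-wide, which is a common source of the permission errors covered later.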

2. Get the Auto-GPT repository

You can fetch the Auto-GPT files and code from the repository using Git or manually. We will explain both so you can choose based on your preferences.

Cloning the repository via Git

Download Auto-GPT by cloning the repository with the git command. Run it without sudo so that your user account owns the cloned files:

git clone https://github.com/Significant-Gravitas/AutoGPT.git

This creates an AutoGPT folder containing all the retrieved files in your current directory.

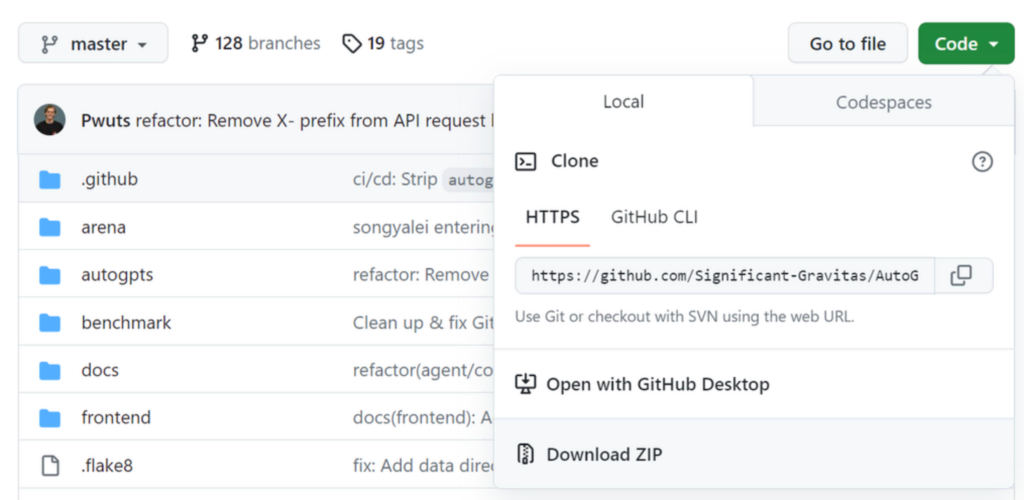

Downloading the repository as a ZIP file

If you want to upload the files manually, open the Auto-GPT official repository in your web browser. Click the Code button and select Download ZIP.

Upload the archive file from your local computer to the remote VPS. For simplicity, we will use the secure file transfer protocol (SFTP). Here are the steps:

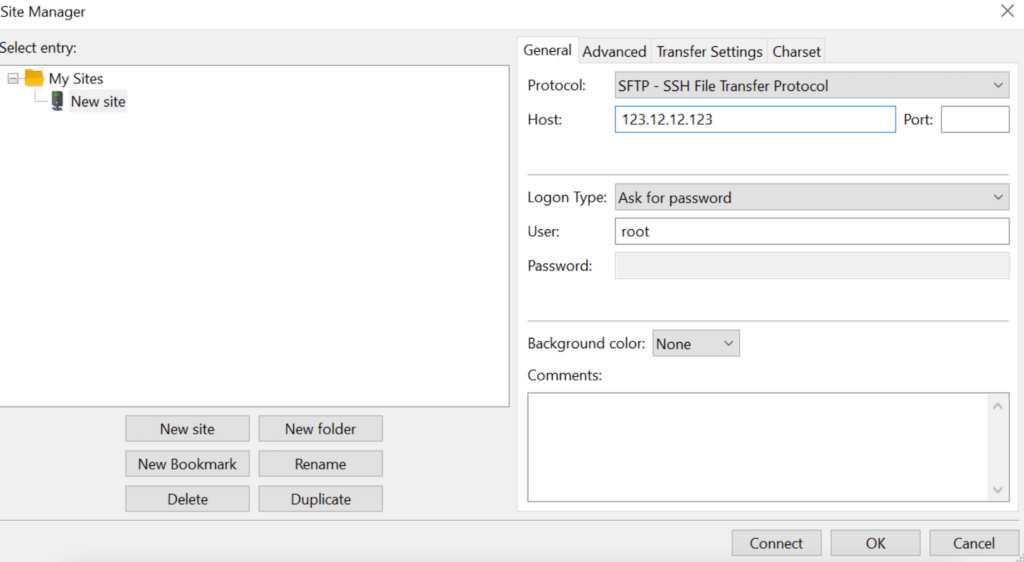

- Download and install the FileZilla client.

- Open the application, then go to File → Site Manager → New Site.

- Choose SFTP as the protocol and enter your server’s IP address, SSH port, username, and password. Click Connect.

- Locate the archive file in your local computer’s download directory. Drag and drop it to the right-hand pane, which shows your VPS.

You can unpack the archive on your local computer before moving it to the VPS, or extract it on the remote server using the Linux unzip command. We recommend the latter since transferring a single compressed archive is faster than transferring many individual files.
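If unzip isn’t installed on the server, Python’s standard zipfile module can do the same job. The sketch below is a hypothetical helper (extract_zip.py and extract_archive are our names) that verifies the archive’s checksums, extracts it, and returns the top-level folder names so you know which directory to cd into.

```python
import sys
import zipfile
from pathlib import Path


def extract_archive(archive: Path, destination: Path) -> list:
    """Extract a ZIP archive and return the top-level names it contained."""
    with zipfile.ZipFile(archive) as zf:
        # testzip() returns the first corrupt member name, or None if all CRCs match.
        bad = zf.testzip()
        if bad is not None:
            raise zipfile.BadZipFile(f"Corrupt member in archive: {bad}")
        zf.extractall(destination)
        # Collect top-level entries, e.g. the repository's root folder.
        return sorted({name.split("/", 1)[0] for name in zf.namelist()})


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python3 extract_zip.py <archive>.zip  (extracts into the current folder)
    print(extract_archive(Path(sys.argv[1]), Path(".")))
```

The CRC check catches a transfer that was interrupted halfway, which otherwise tends to surface later as confusing missing-file errors.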

3. Install the required packages

Auto-GPT requires various Python packages. Instead of installing them individually, pass the requirements.txt file to pip to install all dependencies at once.

Before doing so, change your working directory to the AutoGPT folder by running cd AutoGPT. Then enter the following to start the installation:

pip install -r docs/requirements.txt

If you encounter permission issues, run the command with sudo and add the --user option:

sudo pip install --user -r docs/requirements.txt

Important! If it still doesn’t work, you can add the --break-system-packages option. However, we don’t recommend it because it lets pip modify packages managed by your operating system, which might break system tools.
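To see which requirements are still missing after an interrupted or failed install, you can compare the file against the installed distributions. This is a simplified sketch using the standard importlib.metadata module; the helper names are hypothetical, and the parser deliberately ignores pip options, URL requirements, and other advanced syntax.

```python
import re
from importlib import metadata
from pathlib import Path

# Characters that end a distribution name in a requirement line,
# e.g. "requests>=2.31", "uvicorn[standard]", "foo ; python_version<'3.11'".
_NAME_END = re.compile(r"[\s<>=!~;\[@]")


def requirement_names(text: str) -> list:
    """Extract distribution names from requirements.txt content (simplified)."""
    names = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        # Skip blank lines and pip options such as -r, -e, --index-url.
        if not line or line.startswith("-"):
            continue
        names.append(_NAME_END.split(line, 1)[0])
    return names


def missing_distributions(requirements: Path) -> list:
    """Return names listed in the file that are not installed in this environment."""
    missing = []
    for name in requirement_names(requirements.read_text()):
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing
```

Run it from the activated virtual environment, for example print(missing_distributions(Path("docs/requirements.txt"))), so it inspects the same environment Auto-GPT will use.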

4. Configure the OpenAI API Key

Use the OpenAI API key to connect Auto-GPT with the GPT large language model (LLM). Here’s how to do it:

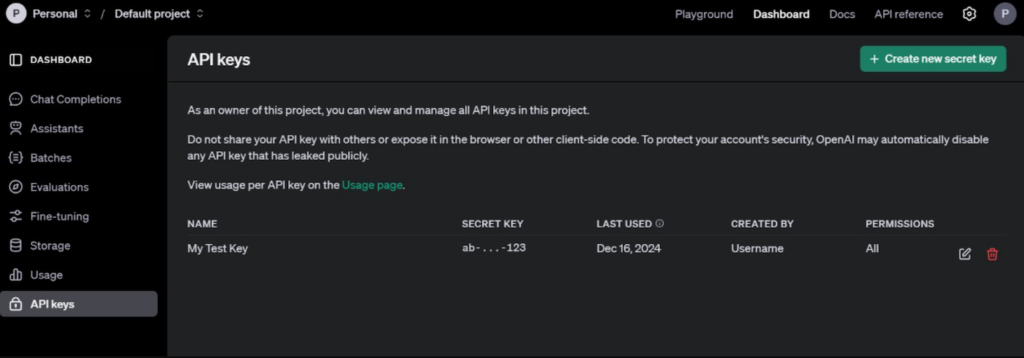

- Make a new account on the OpenAI login page. Skip this step if you have one already.

- Go to the OpenAI API key generation page. Click Create new secret key.

- Enter a name and select your project. Click Create secret key.

- Copy the secret key and store it in a safe location since you won’t be able to view it again. Click Done.

Navigate to AutoGPT/classic/original_autogpt and follow these steps to put the API key in Auto-GPT’s .env file:

- Rename the .env.template file to activate it:

sudo mv .env.template .env

- Open the file using a text editor like nano:

sudo nano .env

- Remove the hash (#) symbol at the start of the OPENAI_API_KEY line and enter your API key after the equals sign. It will look like this:

OPENAI_API_KEY=abcdef12345

- Press Ctrl + X, Y, and Enter to save the changes.

You can also choose which LLM handles different tasks. To do so, set the SMART_LLM and FAST_LLM values in the .env file to OpenAI model names such as gpt-4 or gpt-3.5-turbo.

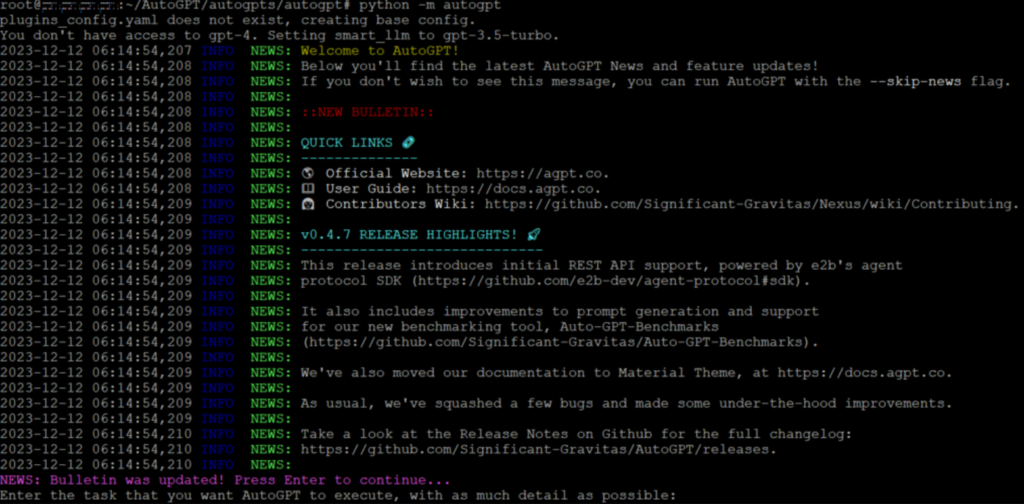
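A common cause of key errors is a line that is still commented out or has stray spaces or quotes. The sketch below is a hypothetical checker (read_env and check_openai_key are our names). Its simplified KEY=VALUE parsing is an assumption and is not necessarily identical to how Auto-GPT itself loads the file; for example, it does not strip inline comments.

```python
from pathlib import Path


def read_env(path: Path) -> dict:
    """Parse simple KEY=VALUE lines from a .env file; commented lines are ignored."""
    values = {}
    for raw in path.read_text().splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        # Remove one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def check_openai_key(path: Path) -> str:
    """Return a short diagnosis of the OPENAI_API_KEY entry."""
    key = read_env(path).get("OPENAI_API_KEY")
    if key is None:
        return "missing: the line is absent or still commented out with #"
    if not key:
        return "empty: paste your secret key after the = sign"
    if any(ch.isspace() for ch in key):
        return "suspicious: the key contains whitespace"
    return "set"
```

Calling print(check_openai_key(Path(".env"))) from AutoGPT/classic/original_autogpt prints the diagnosis without echoing the key itself.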

5. Start Auto-GPT

To start the AI tool, navigate to AutoGPT/classic/original_autogpt and enter this command in your command-line interface:

python -m autogpt

Once you see the welcome message, press Enter to start using the tool.

6. Set up Docker (optional)

Use Docker to install Auto-GPT in an isolated, portable environment. It provides a more reliable way to run the tool in the background than a multiplexer like Linux Screen.

After installing Docker on your Ubuntu system, build a Docker image for your project by running this command in the folder that contains Auto-GPT’s Dockerfile:

docker build -t autogpt .

Run the Auto-GPT container using the command below:

docker run -it --env-file=./.env -v $PWD/auto_gpt_workspace:/app/auto_gpt_workspace autogpt

Alternatively, use these commands if you have already installed Docker Compose:

docker-compose build auto-gpt

docker-compose run --build --rm auto-gpt

Troubleshooting Auto-GPT installation issues

In this section, we will explore several common Auto-GPT installation issues and their solutions.

Missing modules

If Auto-GPT fails to start and the terminal shows a missing module error, check whether you are in the correct directory. You must run the command within the AutoGPT/classic/original_autogpt source code folder.

This error can also occur when the dependencies are misconfigured. To resolve it, reinstall the required packages by running pip install -r followed by the path to the requirements file.

If other modules are missing, install them using Poetry. After navigating to the AutoGPT/classic/original_autogpt folder, run the following command:

poetry install

Rerun python -m autogpt, and the issue should be resolved. If the error persists, use pip to install the missing modules manually. For example, if the terminal shows that Auto-GPT is missing the plugin template, enter:

pip install auto_gpt_plugin_template

Meanwhile, if you encounter a missing Git module error, run this command:

pip install gitpython
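Rather than fixing one missing module per run, you can check several at once. This sketch uses the standard importlib.util.find_spec function, which returns None when a top-level module can’t be found. Note that import names can differ from pip package names (the gitpython package is imported as git). The mapping below only covers the two examples above; extend it with the names from your own error messages.

```python
import importlib.util

# Import name -> pip package name. Only the two examples from this guide;
# add entries for other modules your error messages mention.
MODULES = {
    "git": "gitpython",
    "auto_gpt_plugin_template": "auto_gpt_plugin_template",
}


def missing_modules(modules: dict) -> list:
    """Return pip package names whose import name cannot be found."""
    return [pkg for name, pkg in modules.items()
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(MODULES)
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("All checked modules are importable.")
```

The script prints a single pip install command you can copy, which keeps the fix inside the active virtual environment.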

Missing auto-gpt.json

The Auto-GPT installation process should automatically create an auto-gpt.json file. If the file doesn’t exist, your command-line interface returns an error because the tool can’t store data.

The simplest way to fix it is by creating the file manually within the Auto-GPT root folder using the Linux touch command:

sudo touch auto-gpt.json

API connection errors

A misconfigured API key or missing billing details can cause API connection errors. If you don’t have free credits, ensure you have set up a payment method and billing details in your OpenAI account. Otherwise, Auto-GPT can’t connect to the GPT LLM to process your prompts.

If you encounter an invalid key error despite properly configuring the API key in .env, try entering the key directly in Auto-GPT’s config.py file, located in the Auto-GPT/autogpt/config path.
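To tell an invalid key apart from a billing problem, you can call the OpenAI API directly, outside Auto-GPT. A minimal sketch using only the standard library: it sends a GET request to the list-models endpoint with a Bearer token and maps the status code to a hint. The hint wording and function names are our own; OpenAI documents 401 for invalid authentication and 429 for rate limits or exhausted quota.

```python
import urllib.error
import urllib.request

# Hints for common status codes returned by the OpenAI API.
STATUS_HINTS = {
    200: "key accepted",
    401: "invalid or revoked key: check OPENAI_API_KEY",
    429: "rate limited or quota exhausted: check billing",
}


def describe_status(status: int) -> str:
    """Translate an HTTP status code into a troubleshooting hint."""
    return STATUS_HINTS.get(status, f"unexpected HTTP status {status}")


def check_key(api_key: str, timeout: float = 10.0) -> str:
    """Call the list-models endpoint and describe the result."""
    request = urllib.request.Request(
        "https://api.openai.com/v1/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return describe_status(response.status)
    except urllib.error.HTTPError as err:
        # Non-2xx responses raise HTTPError; its code carries the status.
        return describe_status(err.code)
    except urllib.error.URLError as err:
        return f"network error: {err.reason}"
```

For example, print(check_key("your-secret-key")). A 200 response with Auto-GPT still failing points back to how the key is loaded, not to the key itself.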

How to use Auto-GPT

Once Auto-GPT runs, describe your task, and the tool will assign an AI agent with specific roles and goals.

For example, if you ask Auto-GPT to “write content for an article about AI,” it will assign EditorGPT, whose role is to help writers create high-quality content. You can also enter various AI prompts for VPS management to generate instructions for server administration.

Enter follow-up or confirmation inputs based on the instructions, which might differ depending on the agent. If you’re new to Auto-GPT, we recommend reading our AI prompt engineering tutorial.

For specific tasks, you can add the following options to the python command to run Auto-GPT:

- --speak. The agent will provide an audio output using text-to-speech.

- --continuous. Auto-GPT will run the task autonomously without user authorization.

- --self-feedback. The tool will automatically provide feedback by verifying its actions based on the task.

Warning! The Auto-GPT developers don’t recommend using the --continuous and --self-feedback options, as they can lead to unexpected behavior.
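If you launch Auto-GPT from your own scripts, a small wrapper can assemble these options and print the warning above whenever a risky flag is used. This is a hypothetical sketch (build_command, risky_flags, and run are our names); it only uses the flags listed in this section and assumes you pass the AutoGPT/classic/original_autogpt folder as the working directory.

```python
import subprocess
import sys

# Options that the Auto-GPT developers warn can cause unexpected behavior.
RISKY_FLAGS = {"--continuous", "--self-feedback"}


def build_command(speak: bool = False, continuous: bool = False,
                  self_feedback: bool = False) -> list:
    """Build the argv for `python -m autogpt` with the chosen options."""
    cmd = [sys.executable, "-m", "autogpt"]
    if speak:
        cmd.append("--speak")
    if continuous:
        cmd.append("--continuous")
    if self_feedback:
        cmd.append("--self-feedback")
    return cmd


def risky_flags(cmd: list) -> list:
    """Return the risky options present in a command, sorted."""
    return sorted(RISKY_FLAGS.intersection(cmd))


def run(cmd: list, cwd: str) -> int:
    """Warn about risky options, then run Auto-GPT from `cwd`."""
    for flag in risky_flags(cmd):
        print(f"Warning: {flag} can lead to unexpected behavior.")
    return subprocess.run(cmd, cwd=cwd).returncode
```

For example, run(build_command(speak=True), "AutoGPT/classic/original_autogpt"). Using sys.executable ensures the wrapper starts Auto-GPT with the virtual environment’s interpreter.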

Moreover, you must configure additional API keys for tasks beyond text generation. For example, integrate DALL-E to create images and ElevenLabs for text-to-speech functionality.

Auto-GPT best practices

In this section, we will explain Auto-GPT best practices to help you understand how to efficiently use AI technology in your website or application development.

Configure Auto-GPT for specific tasks

Customizing Auto-GPT settings lets you configure the tool specifically for your task. You can do so by defining the agent’s name, role, and goals in a YAML file.

This file lets you consistently run the AI tool for specific tasks without repeatedly specifying the role and purpose. For example, create a configuration file for a writing agent:

nano writer_ai_settings.yaml

Enter the AI name, role, and goal. These vary depending on your tasks. Here’s an example:

ai_name: WriterGPT
ai_role: An AI assistant designed to research and write an engaging article to draw readers.
ai_goals:
  - Research the article content to ensure validity.
  - Create article structure.
  - Write engaging written content for a website to draw readers.

To apply the configurations, pass the file with the --ai-settings option when running Auto-GPT:

python -m autogpt --ai-settings writer_ai_settings.yaml

To make the file the default, enter its name in the .env file’s AI_SETTINGS_FILE variable. You can also set prompt configurations by creating a similar file and passing it with the --prompt-settings option.
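If you maintain settings files for several agents, generating them from a script avoids indentation and quoting mistakes. A minimal sketch, assuming the three keys from the example above (ai_name, ai_role, ai_goals); the helper names are hypothetical. It avoids a PyYAML dependency by quoting each value with json.dumps, because JSON strings are also valid YAML double-quoted scalars, so roles containing colons or # characters stay intact.

```python
import json
from pathlib import Path


def _quote(value: str) -> str:
    """Quote a scalar so that YAML reads it back as a plain string."""
    return json.dumps(value, ensure_ascii=False)


def settings_yaml(name: str, role: str, goals: list) -> str:
    """Render an ai_settings file using the keys from the example above."""
    lines = [f"ai_name: {_quote(name)}", f"ai_role: {_quote(role)}", "ai_goals:"]
    lines += [f"  - {_quote(goal)}" for goal in goals]
    return "\n".join(lines) + "\n"


def write_settings(path: Path, name: str, role: str, goals: list) -> None:
    """Write the rendered settings to `path` as UTF-8."""
    path.write_text(settings_yaml(name, role, goals), encoding="utf-8")
```

For example, write_settings(Path("writer_ai_settings.yaml"), "WriterGPT", "An AI assistant...", ["Create article structure."]) produces a file you can pass to --ai-settings.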

Use custom scripting for enhanced functionality

Expand Auto-GPT features and data sources using custom scripts called plugins. For example, you can install the Wikipedia plugin to enable the tool to retrieve data from the website.

You can create your own plugin using the __init__.py template or install pre-configured plugins from their repositories. Since the configuration steps differ slightly between plugins, check each plugin’s documentation.

Typically, you must extract the files into Auto-GPT’s plugin folder and add the plugin to the .env file.

Optimize memory usage for handling large datasets

You can cache historical Auto-GPT interactions in a memory backend to optimize data handling and let the tool create prompts based on earlier context. On low-performance systems, this helps Auto-GPT run smoothly when conducting complicated tasks.

A popular way to do this is to connect the Pinecone vector database through its API. Alternatively, you can use Redis, which is included with Auto-GPT if you install the tool using Docker Compose.

To set it up, run this command to create a Redis container:

docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest

Enter the following settings in the .env file. Replace the password with your own:

MEMORY_BACKEND=redis
REDIS_HOST=localhost
REDIS_PORT=6379
REDIS_PASSWORD="PASSWORD"
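To confirm that Redis is reachable with these settings before starting Auto-GPT, you can send a PING over a plain socket. This sketch encodes commands in the Redis serialization protocol (RESP) as an array of bulk strings; the helper names are hypothetical, and reading a single recv() chunk is a simplification that works for short replies like these. A reply of +PONG means the server is reachable; a reply starting with - (for example, -NOAUTH) means the password settings don’t match.

```python
import socket


def encode_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode()
        out.append(f"${len(data)}\r\n".encode() + data + b"\r\n")
    return b"".join(out)


def redis_ping(host: str = "localhost", port: int = 6379,
               password: str = "", timeout: float = 3.0) -> str:
    """Send PING (after AUTH if a password is given) and return the reply line."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        if password:
            sock.sendall(encode_command("AUTH", password))
            reply = sock.recv(1024)
            if not reply.startswith(b"+OK"):
                return reply.decode(errors="replace").strip()
        sock.sendall(encode_command("PING"))
        return sock.recv(1024).decode(errors="replace").strip()
```

Pass the same values you put in .env, for example print(redis_ping("localhost", 6379, "PASSWORD")).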

Integrate Auto-GPT with other APIs

Integrating Auto-GPT with other platforms lets you expand the tool’s functionalities. For example, you can enable image generation in the AI tool using DALL-E or Stable Diffusion.

Meanwhile, use ElevenLabs or Microsoft Azure’s text-to-speech API to enable voice output. For automatic speech transcription, use Hugging Face.

If you want to simplify AI application development, integrate Auto-GPT with LangChain. To use these tools, retrieve their API keys from the respective websites and put them in the .env file.

Conclusion

Auto-GPT is a general-purpose, autonomous AI agent for various tasks like application development and data analysis.

In this Auto-GPT installation guide, we have explained the steps to set up the tool in a VPS environment:

- Install Python and Git. Download the latest version of Python and Git using your operating system’s package manager command, like apt or yum.

- Download the Auto-GPT repository. Clone the official Auto-GPT repository using the git clone command or upload the ZIP file via SFTP.

- Install the dependencies. Run the pip install command to download and install the packages listed in the requirements.txt file.

- Set up the OpenAI API. Create a new OpenAI account and generate an API key. Rename the .env.template file and enter your secret key using a text editor.

- Start Auto-GPT. Enter the python -m autogpt command to launch Auto-GPT.

- Set up Docker. Install Docker, create a Docker image, and run the Auto-GPT service container.

If you encounter an error, ensure you have the auto-gpt.json file and all dependencies. Also, check that you run the python command within the AutoGPT/classic/original_autogpt folder.

To use the tool efficiently, store the agent configuration in a YAML file, integrate other tools with API keys, and install plugins according to your tasks. Also, configure Pinecone or Redis for Auto-GPT performance optimization.

How to install Auto-GPT FAQ

Can you use Auto-GPT for free?

While Auto-GPT is free and open-source, OpenAI charges for API usage based on the number of tokens processed. For example, at the time of writing, GPT-3.5 Turbo input costs $0.0010 per 1,000 tokens, or around 750 words. For current rates, refer to the OpenAI pricing page.
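The arithmetic behind that estimate is straightforward: convert words to tokens using the rough 750-words-per-1,000-tokens ratio, then multiply by the per-1,000-token price. A minimal sketch, using the example figures above as defaults; substitute current prices, and note that output tokens are billed separately at their own rate.

```python
def estimate_cost(words: int, price_per_1k_tokens: float = 0.0010,
                  words_per_1k_tokens: float = 750) -> float:
    """Estimate input cost in USD from a word count (rough approximation)."""
    # About 1,000 tokens correspond to 750 English words.
    tokens = words * 1000 / words_per_1k_tokens
    return tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    for words in (750, 7500, 75000):
        print(f"{words:>6} words -> ${estimate_cost(words):.4f}")
```

At these rates, 7,500 words of input is roughly 10,000 tokens, or about $0.01. Autonomous runs resend context on every step, so real usage can be much higher than the length of your prompt suggests.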

What are the limitations of Auto-GPT?

Auto-GPT can’t convert a series of actions into reusable functions, which makes it inefficient when you want to rerun a task from the beginning.

It also inherits the GPT LLM’s limitations. For example, GPT-3.5 is prone to producing misinformation, and its knowledge only extends to January 2022.

What are the future trends for Auto-GPT?

Auto-GPT API integration and learning capabilities make the tool versatile, allowing you to automate tasks from start to finish.

In the future, it could be used to develop other AI applications with the LangChain framework. It may also help resolve live support calls by providing spoken solutions through text-to-speech.

Comments

November 25 2024

Weird, I'm seeing quite a different directory structure in my AutoGPT folder. The env.template file seems to reside in AutoGPT/classic/original_autogpt and there's no OPEN_API_KEY variable in it.

December 04 2024

Thanks for pointing that out! We’ll update the article to reflect this. You’re right: the .env.template file is in the AutoGPT/classic/original_autogpt folder, and the variable is actually OPENAI_API_KEY, not OPEN_API_KEY. The repo gets updated regularly, so it’s possible the structure changed recently. Appreciate your feedback :D